
Scoring for Ranking the '70s and Writers

Ranking the '70s and Ranking the Rock Writers

Most attempts to use the charts to rank records are incomplete. Some will cite a peak position: “It went to #1.” Some will note longevity: “Twenty-six weeks on the charts.” Both peak position and longevity are important, but getting to “most popular in its time” requires deeper analysis that accounts for at least those two dimensions.

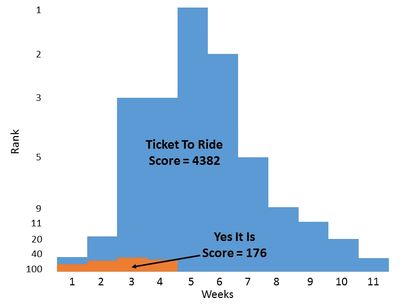

Most researchers who publish chart metrics start with the trajectory traced by a record as it rises and falls from rank to rank, week by week. These paths can be compared graphically—for example, Ticket To Ride, clearly the bigger hit, and its flip side, Yes It Is.

However, graphing the 700-odd songs that entered the charts each year in those days would be tedious, and mathematical metrics are much more convenient. Chartologists usually start the process by assigning points to ranks; the most common approach is #1 = 100 points, #2 = 99, and so on to #100 = 1. Add the points, high score wins. We call this the Reverse Rank method, and by this method, Ticket To Ride scores 939 points and Yes It Is scores 176.

Reverse Rank has flaws. The difference between #99 and #100 is one point, and so is the difference between #1 and #2, which doesn’t seem equitable. In Reverse Rank, three weeks at a mediocre #67 scores 102 points—more than one week at #1. That doesn’t seem to give enough credit for achieving high rank, and in fact, when all records are viewed using Reverse Rank, it’s clear the system favors longevity over achievement.
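The arithmetic behind that comparison can be sketched in a few lines. This is a minimal illustration of Reverse Rank as described above; the function name `reverse_rank_score` and the `chart_size` parameter are ours, chosen for the example.

```python
def reverse_rank_score(weekly_ranks, chart_size=100):
    """Sum Reverse Rank points: #1 earns chart_size points, last place earns 1."""
    for rank in weekly_ranks:
        if not 1 <= rank <= chart_size:
            raise ValueError(f"rank {rank} is outside 1..{chart_size}")
    return sum(chart_size + 1 - rank for rank in weekly_ranks)


# Three weeks at #67 versus a single week at #1.
three_weeks_at_67 = reverse_rank_score([67, 67, 67])
one_week_at_1 = reverse_rank_score([1])
print(three_weeks_at_67, one_week_at_1)  # 102 100
```

Each week at #67 is worth 34 points, so three such weeks edge out a week at the top of the chart.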

Score vs. chart rank

Elongating the scoring scale (#1 = 1000, #2 = 824, #3 = 658 … #99 = 2, #100 = 1) makes rank achievement more important while still valuing longevity.
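To see how much more the elongated scale rewards the top of the chart, compare what a week at #1 is worth relative to a week at #3 under each scale. The sketch below uses only the five scale values quoted above; the intermediate ranks are not listed here, so they are left out rather than guessed. The names `ELONGATED_POINTS` and `top_to_third_ratio` are hypothetical.

```python
# Only the scale values quoted in the text; ranks 4-98 are not given here.
ELONGATED_POINTS = {1: 1000, 2: 824, 3: 658, 99: 2, 100: 1}


def reverse_rank_points(rank):
    """Reverse Rank: #1 = 100 points down to #100 = 1 point."""
    return 101 - rank


def top_to_third_ratio(points_for_rank):
    """How many times more a week at #1 is worth than a week at #3."""
    return points_for_rank(1) / points_for_rank(3)


print(round(top_to_third_ratio(reverse_rank_points), 2))   # 1.02
print(round(top_to_third_ratio(ELONGATED_POINTS.get), 2))  # 1.52
```

Under Reverse Rank a week at #1 is worth about 2 percent more than a week at #3; under the elongated scale it is worth about 52 percent more.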

Applying our scoring method

Applying this scale to the Ticket To Ride vs Yes It Is comparison shows how the new scale accentuates rank performance by enlarging the area under a record's curve.

Average score over the period

But this doesn’t totally solve the “best of all time” problem. Tastes, the record business and most importantly the charts changed over time—even over as short a time as five years. A record scoring 500 points in 1964 is not necessarily the equal of one scoring 500 points in 1968 or 1972.

In 1966, about 750 records entered the charts. By 1985, fewer than 400 did. While some of this surely derived from chart methodology, changes in the business (the shift from selling singles to selling albums, and the proliferation of new radio formats) were probably the larger driver.

Since the same number of places (1 through 100) is awarded and scored each week, the score of an “average” record increased by nearly a factor of two over that period. Because the charts changed, direct score comparison doesn’t work. The only solution is to normalize scoring for the era in which the record charted, something only a few chartologists do.
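A rough check of that factor, under a simplifying assumption: if the total points awarded over a year, call it P, stay about the same and are shared among the N records that enter the charts that year, the average score is P/N. The symbols P, N and the bar notation are ours, introduced only for this back-of-the-envelope estimate.

$$
\bar{s} = \frac{P}{N}, \qquad
\frac{\bar{s}_{1985}}{\bar{s}_{1966}}
= \frac{P / N_{1985}}{P / N_{1966}}
= \frac{N_{1966}}{N_{1985}}
\approx \frac{750}{400} \approx 1.9
$$

Since fewer than 400 records entered in 1985, the true ratio would be slightly higher than this estimate.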

A “best ever” list that spans a long period has to consider performance in time context. On an absolute basis, Barry Bonds hit the most home runs in a major league season: 73 in 2001 (Sammy Sosa finished second with 64). But was that the greatest performance ever?

Consider Babe Ruth’s 1920 season wherein he hit only 54, but second place was 19. The most home runs in a non-Babe Ruth season in the modern era to that point was 24. His performance was transcendent not just beyond what had been done, but what had even been imagined.

And that’s our approach to normalizing for era: look for performances that are transcendent in their time. In this case, develop a raw score for every record, then divide it by the average raw score of all records that entered the charts within 26 weeks before or after it. Thus, an “average” record always scores 1, and the score of any record is a multiple of the average. A record 10 times as popular as the average in one era should be comparable to a record 10 times the average in another era, and that ratio is certainly a better comparison than raw score. To make the scores easier to read, in our books this ratio is multiplied by 1000.
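Here is a minimal sketch of that normalization, under stated assumptions: each record is reduced to an integer entry week and a raw score, the 26-week window is inclusive on both sides, and a record counts toward its own era average. The function name `normalize_for_era` and the sample data are hypothetical, not taken from our database.

```python
def normalize_for_era(records, window_weeks=26, scale=1000):
    """Return era-normalized scores for (entry_week, raw_score) pairs.

    Each raw score is divided by the mean raw score of all records that
    entered within window_weeks of it, then multiplied by scale.
    Raw scores are assumed positive (any charting record earns points).
    """
    normalized = []
    for entry_week, raw_score in records:
        peers = [score for week, score in records
                 if abs(week - entry_week) <= window_weeks]
        era_average = sum(peers) / len(peers)  # peers always includes the record itself
        normalized.append(scale * raw_score / era_average)
    return normalized


# Made-up data: a low-scoring era (weeks 0-20) and a high-scoring era (weeks 100-110).
records = [(0, 100), (10, 300), (20, 200), (100, 900), (110, 300)]
print(normalize_for_era(records))  # [500.0, 1500.0, 1000.0, 1500.0, 500.0]
```

In this toy data, the record with a raw score of 300 in the first era and the record with a raw score of 900 in the second both come out at 1500, because each is one and a half times the average of its own time.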

The 1955-1991 era in the three major magazines, Billboard, Cash Box and Music Vendor/Record World, includes 24,426 songs, either as singles or parts of medleys. Approximately 50 entries in this database scored over 10,000 (10 times the average in their time), which puts them in the top 0.2 percent. I consider these truly transcendent. Approximately 250 entries scored 7,500 or more, the top 1 percent.

Scoring for Ranking The Albums

This book covers the records that entered the Billboard album chart from August 17, 1963 to December 31, 1989. In the 1960s, prior to August 17, 1963, Billboard had separate charts for mono and stereo albums. The mono chart listed 150 records and the stereo chart 50, and I saw no reasonable way to synthesize a single chart that would be comparable to the chart that came into existence on that date. At the time of the switch, Billboard explained that it adopted the new methodology because sales of stereo albums were approaching parity with those of mono albums; by the end of the decade mono was virtually non-existent.

The methodology for this book begins with sales of the top ten albums by year, which could be retrieved for 1991-2019 from the Nielsen Music Year-End Reports. Sales for the top album of the year were scaled to 1000 and positions 2-10 were scaled accordingly. Scores for the remaining positions, 11-200, were calculated empirically to produce a smooth scoring curve.
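The scaling step for the top ten can be sketched as follows, assuming "scaled accordingly" means in proportion to the top album's sales. The function name `scale_to_top` is hypothetical and the sales figures are invented for illustration; they are not Nielsen data. The empirical curve for positions 11-200 is not reproduced here.

```python
def scale_to_top(sales_by_position, top_score=1000):
    """Scale a year's sales so the best seller scores top_score.

    sales_by_position[0] is the top album; the others are scaled in
    proportion to it (our reading of "scaled accordingly").
    """
    top_sales = sales_by_position[0]
    return [top_score * sales / top_sales for sales in sales_by_position]


# Invented unit sales for positions 1-4, for illustration only.
example_sales = [4_000_000, 3_000_000, 2_000_000, 1_000_000]
print(scale_to_top(example_sales))  # [1000.0, 750.0, 500.0, 250.0]
```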

To calculate scores, weekly rankings for each album were obtained from Billboard Magazine, either archived hardcopy or the website (https://www.billboard.com/charts/billboard-200). The first consolidated Mono-Stereo chart appeared as a 150-record list. On March 25, 1967, the chart was expanded to 165 records, then to 175 on April 8, 1967, and on May 13, 1967 the 200-record format was introduced, which remains the standard today.

A method similar to that used for normalizing singles scores is used for albums. Specifically, this means dividing the score of every record by the average score of all the records that entered the charts from three months before it to one year after it. This span is arbitrary, but it is intended to capture the environment into which the record was released, with a long tail to account for the long chart life of high-scoring records.
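The asymmetric album window can be sketched with calendar dates. This is an illustration only: it assumes "three months" is about 91 days and "one year" is 365 days, treats both ends of the window as inclusive, and expects the album being scored to be in the list. The function names and the sample albums are hypothetical.

```python
from datetime import date, timedelta


def album_era_average(albums, entry_date,
                      before=timedelta(days=91), after=timedelta(days=365)):
    """Mean raw score of albums entering from `before` ahead of entry_date
    to `after` following it. albums is a list of (entry_date, raw_score)."""
    window_start = entry_date - before
    window_end = entry_date + after
    peers = [score for entered, score in albums
             if window_start <= entered <= window_end]
    return sum(peers) / len(peers)


def normalized_album_score(albums, entry_date, raw_score):
    """Raw score as a multiple of the era average (an average album scores 1)."""
    return raw_score / album_era_average(albums, entry_date)


# Invented entry dates and raw scores, for illustration only.
albums = [
    (date(1970, 1, 3), 400.0),
    (date(1970, 3, 7), 1200.0),
    (date(1970, 12, 5), 800.0),
    (date(1972, 6, 3), 5000.0),  # falls outside the window used below
]
print(normalized_album_score(albums, date(1970, 3, 7), 1200.0))  # 1.5
```

The 1972 album is excluded from the average for the March 1970 entry, so the era average is 800 and the album scores 1.5 times its peers.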

To illustrate how much the average score, which is driven by the number of records that make the chart, differs from year to year, a plot of average score by year is shown to the right. Clearly there are low scoring years and high scoring years, and normalizing puts them on an equivalent footing.

The purpose of normalizing to that era average is to remove dependencies of record score on externalities; the average at the time is an internal standard, and an “average” record always has a score of 1. Additionally, the average scores for a year should be very close to 1. Thus, a record with a score of 10 always has ten times the chart presence of an average record.

Note how using this normalization method makes the years much more equivalent.

For those who are statistically inclined, the average (mean) score of a record is not equal to the median score because the distribution is skewed. The lowest score is zero, and in principle there is no highest score. Thus, the distribution is constrained only on the low end. Exceptionally high scoring records will inflate the average but may have no effect at all on the median.
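A small demonstration of that point, using Python's standard `statistics` module and two invented sets of normalized scores that differ only in their highest value:

```python
from statistics import mean, median

# Invented normalized scores; only the top record differs between the two lists.
typical_scores = [0.25, 0.5, 0.75, 1.0, 2.5]
with_blockbuster = [0.25, 0.5, 0.75, 1.0, 12.5]

print(mean(typical_scores), median(typical_scores))      # 1.0 0.75
print(mean(with_blockbuster), median(with_blockbuster))  # 3.0 0.75
```

The blockbuster triples the mean while the median stays where it was.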

At the right is shown the distribution of all the albums by score.